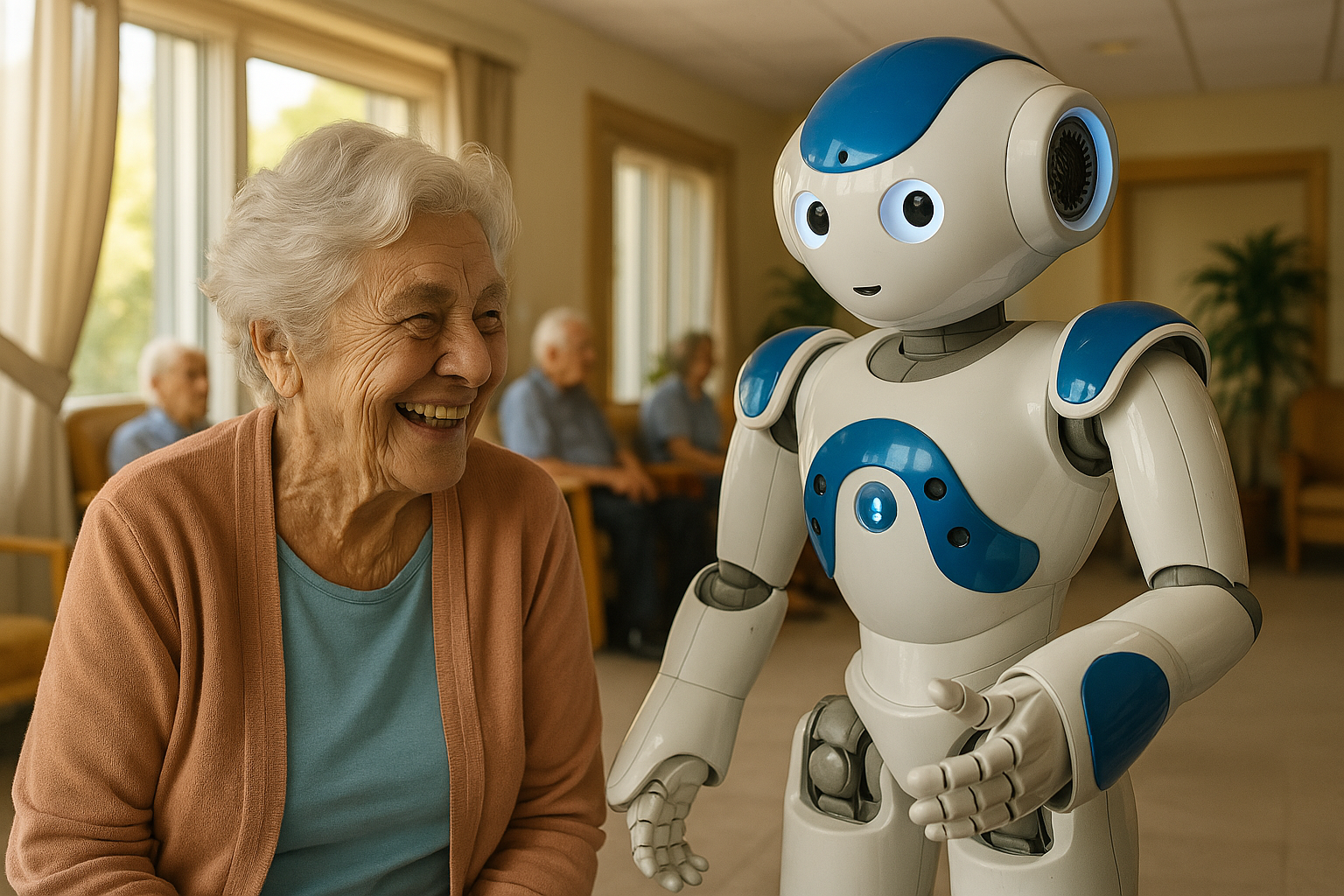

The rapid evolution of healthcare robotics promises to assist an aging population with everyday health needs – from reminding older adults to take medications to providing companionship and advice. As these robotic advisors become more prevalent in elder care, a crucial question arises: will seniors trust these robots, and thus follow their guidance? Trust is fundamental in any caregiving relationship, and perhaps even more so when the caregiver is a robot. In sensitive contexts like elder care, robots must navigate not only physical assistance but also emotional support and ethical boundaries. Gaining the trust of older adults is paramount to ensuring they accept a robot’s presence and comply with its health-related advice or instructions. This article examines how robots can build trust through both narrative (the content and style of their communication) and behavior (their actions and social cues), thereby encouraging compliance with healthcare guidance. We will explore the factors influencing trust in eldercare robots, strategies for trust-building via narrative and behavior, and the ethical considerations of deploying robotic advisors in the care of older adults.

Why focus on trust and compliance? In healthcare, even human providers know that a patient’s trust directly affects whether they follow medical advice. Similarly, an older adult is more likely to accept help or heed wellness recommendations from a robot if they perceive it as trustworthy and caring. Effective robotic advisors could improve medication adherence, encourage exercise and healthy habits, check on wellbeing, and reduce loneliness – but only if older users are comfortable engaging with them and believe in their reliability. On the other hand, a lack of trust can lead to rejection of the technology or refusal to follow its suggestions, negating the potential benefits. Thus, understanding how to foster trust and appropriate compliance is key to integrating robots into eldercare successfully.

In the sections that follow, we discuss the unique trust factors at play between older adults and robots in healthcare roles. We look at proven techniques to build trust via narrative (for example, through storytelling, explanations, or empathetic dialogue) and via observable behavior (such as consistent performance, social cues, and demonstrations of empathy). We also address challenges – like avoiding manipulative persuasion or over-reliance – and ethical safeguards to ensure that trust is earned and not abused. By drawing on up-to-date research and real-world examples, this comprehensive review sheds light on how robotic advisors can become trusted partners in care for seniors, ultimately improving compliance with health interventions and enhancing the well-being of older people.

The Importance of Trust and Compliance in Eldercare Robotics

In eldercare, trust can be defined as a senior’s willingness to rely on a robot’s assistance or advice despite vulnerabilities, having positive expectations of the robot’s intentions and behavior. An older person might be physically or emotionally vulnerable, so trusting a robot means they feel the robot will not harm, deceive, or neglect them. Compliance, in this context, refers to the degree to which older adults follow the health-related advice, recommendations, or requests made by the robot – for instance, taking a pill when the robot reminds them, or engaging in an exercise the robot suggests. High trust usually increases the likelihood of compliance, whereas distrust may lead the person to ignore or reject the robot’s guidance.

In practical terms, building trust and compliance in eldercare robotics matters because:

- Adoption and Acceptance: Older adults are less likely to accept a robot in their home or care facility if they don’t trust it. Trust is often cited as a prerequisite for older people to even begin using technology, especially something as novel as a healthcare robot. If trust is low, the robot might be viewed as intrusive or unreliable, leading to non-use.

- Safety and Well-being: Many healthcare tasks (medication dispensing, monitoring vital signs, emergency assistance) are safety-critical. If the older adult doesn’t comply with a robot’s alerts or instructions (for example, an alert to take medication or a suggestion to call a doctor), their health could suffer. Trust in the robot’s competence and honesty is essential so that seniors take its warnings or advice seriously, improving safety.

- Effective Intervention: Robots aimed at improving health outcomes – such as reminding patients with dementia of daily tasks or guiding physical therapy exercises – only work if users willingly follow along. Studies show that when older adults trust a health advisor robot, they are more receptive to its recommendations and maintain better adherence to health regimens. In one experiment, seniors who reported higher trust in a robotic health advisor were indeed more likely to follow its advice about supplements and lifestyle, demonstrating the direct link between trust and compliance.

- Relationship and Engagement: For socially assistive robots that double as companions, trust enables a deeper engagement. An older person who trusts a robot may confide in it more, use it more frequently, and benefit from reduced loneliness. Without trust, interactions remain superficial and the potential therapeutic value (e.g. alleviating isolation or cognitive stimulation) is lost.

Conversely, mistrust or lack of compliance can be detrimental. If a senior doubts the robot’s capabilities or intentions, they might refuse to let it help with even benign tasks, or constantly seek human confirmation for its advice. This not only limits the robot’s usefulness but could cause frustration. In worst cases, severe distrust might lead an older adult to feel anxious or unsafe around the robot – the opposite of the intended outcome.

Striking the right balance is also vital. Care researchers highlight the need for appropriate trust: the goal is not blind trust or obedience, but a well-calibrated trust where the older adult trusts the robot as far as it is competent. Over-trusting a robot (for example, assuming it’s infallible) can be dangerous if the robot malfunctions or gives incorrect advice. Under-trusting (distrusting a capable robot) means missing out on help. Therefore, designing for trust in eldercare robots entails encouraging justified confidence and healthy skepticism where needed – essentially building a trustworthy system that deserves the user’s trust.

Given these stakes, researchers and designers are intensely studying what makes older adults trust robots and how to encourage compliance ethically. In the next section, we outline the key factors that influence trust in robotic advisors for seniors.

Factors Influencing Trust in Robotic Advisors for Older Adults

Trust between an older adult and a robot is multi-faceted. It depends on the robot’s attributes, the user’s characteristics, and the context of interaction. Understanding these factors is the first step in designing robots that seniors feel comfortable relying on. Recent studies and interviews with older adults have identified several critical trust factors:

- Competence and Reliability: Perhaps the most fundamental factor is the robot’s ability to perform its intended tasks effectively and consistently. Older adults need to see that the robot can do what it promises. This could mean accurately dispensing medication at the right times, giving sound health advice in line with what doctors say, or reliably detecting an emergency (like a fall) and responding. Frequent errors, malfunctions, or inconsistencies can quickly erode trust. On the other hand, a track record of reliability builds confidence. In one survey, seniors highlighted “ability” and “reliability” as key pillars of trust in a robotic care provider. They equated the robot’s competence with professional skill – much like they trust a nurse who is skilled and dependable.

- Safety and Security: Older adults tend to be cautious about safety, and rightly so. Trust grows if the robot is physically safe (moves carefully without bumping or tripping the user) and if it safeguards the user’s data and privacy. For example, a robot that handles a frail patient or assists in bathing must be designed to not cause injury and to preserve the person’s dignity and privacy. If seniors fear the robot could harm them physically or leak personal information, trust will be low. Ensuring safety features (like emergency stop, gentle grips, privacy settings) and communicating them to the user can build trust by showing that the robot is designed to protect them. Safety, in fact, was explicitly identified as a trust factor by older participants when imagining robots helping with personal care tasks.

- Anthropomorphic Design and Sociability: The robot’s appearance and interactive style play a big role in initial trust formation. Many eldercare robots are designed with some human-like or pet-like qualities – for instance, having a friendly face, expressive eyes, or a warm voice – to make them more approachable. Research suggests that a certain level of anthropomorphism can increase trust and likability, because people find it easier to relate to human-like agents. Older adults often appreciate a robot that looks “friendly” or “cute” rather than too industrial. In trials, a socially engaging robot interface (with gestures, a face, and emotional expressions) was rated as more trustworthy and empathetic by seniors compared to a plain technical interface. However, designers must balance this: if a robot looks very human-like but behaves awkwardly or lacks actual capabilities, it can trigger disappointment or even distrust (a mismatch between appearance and ability, often discussed alongside the “uncanny valley” effect). Consistency between appearance and ability is critical – a robot that presents itself as a modest machine will be forgiven for acting like one, but one that appears to have human-level intelligence yet fails simple tasks will lose credibility.

- Empathy and Benevolence: Benevolence, or the perception that the robot has the user’s best interests at heart, is another pillar of trust. While robots do not literally have intentions, they can project a caring persona through their interactions. Older adults are more likely to trust a robot that seems empathetic, kind, and respectful. In interviews, seniors mentioned they would trust a robot that “cares about me” or one that behaves in a companionable manner. This includes the robot expressing concern for their well-being, using polite and patient language, and not rushing or ignoring them. A robot that remembers personal details (“Good morning, John. I hope you slept well.”) and inquires about their feelings (“How are you feeling today?”) demonstrates a form of benevolence and empathy that can greatly enhance trust. On the flip side, if a robot behaves coldly or is purely task-focused with no social niceties, older users might view it as just a machine and might not feel comfortable confiding in it or following its advice.

- Communication and Clarity: Effective communication is essential. For an older adult to trust what a robot says, the robot must speak (or display information) in a clear, understandable manner. This includes using appropriate language (avoiding technical jargon, speaking neither too fast nor too quietly), and being attentive to the user’s communication as well. In studies on trust, older adults valued robots that could listen and respond appropriately, almost like a good human caregiver would. If the user asks a question or expresses a concern, a trustworthy robot should acknowledge it and address it, rather than ignoring input. Miscommunications – such as the robot frequently not understanding the senior’s voice commands, or the senior not grasping the robot’s instructions – can frustrate users and chip away at trust. Therefore, natural and two-way communication builds trust: the senior feels “heard” and confident that they can understand the robot’s guidance correctly.

- Personalization and Familiarity: Trust can deepen as the robot becomes more familiar over time. If a robotic advisor personalizes its interactions to the individual – for example, learning the senior’s daily routine, remembering their preferences or family members’ names, and tailoring its suggestions accordingly – the older adult may come to see the robot as a kind of personal aide who “knows me well.” Personalization signals that the robot is paying attention and investing in the relationship, much like a human caregiver would over long-term care. This familiarity breeds trust and comfort. Many older users will start to treat a consistently present robot almost like a companion or even give it a nickname. Such personal bonding can, in turn, increase their receptiveness to the robot’s advice. Caution is needed, however, to maintain professional boundaries and not encourage unrealistic perceptions; the robot should personalize within appropriate limits and perhaps remind the user that it’s there to help with health (so the trust stays grounded in its role).

- Transparency and Explainability: Given the novelty of AI-driven advisors, transparency about what the robot is doing and why can foster trust. If a robot makes a recommendation (e.g. “It’s time to take your blood pressure medication”), a trust-enhancing approach might include a brief, simple explanation or reassurance (“I see it’s 8 PM, which is your scheduled time for Lisinopril. Taking it now keeps your blood pressure under control” or “because your doctor recommended this schedule”). This kind of explanation provides a narrative rationale, helping the user understand the purpose and validity of the advice. It prevents the robot from seeming like a “black box” issuing orders. Transparency can also mean the robot candidly acknowledging its own limits – for example, stating “I’m just a robot and not a doctor, so for new symptoms we should contact your physician.” Such honesty can increase trust by setting realistic expectations (the user knows the robot won’t bluff expertise). Studies suggest that trust in automated advisors is higher when the system can explain its suggestions in an understandable way, aligning with the broader principle that explainable AI is more trustworthy to users.

- User Autonomy and Control: Older adults often fear losing control to technology. Trust is reinforced if users feel they remain in charge of their care, with the robot as a supportive tool rather than an overlord. Design choices that give seniors a sense of agency – such as being able to override or snooze a reminder, to correct the robot if it’s wrong, or to set preferences – can help. When users know they can decline an activity or double-check information (just as they could with a human nurse), they are more likely to trust the robot’s presence. By contrast, if a robot appears to force actions or make decisions without the user’s input, an older person might become wary or resistant. Therefore, a collaborative approach where the robot invites the user’s agreement (“Shall we do some light exercise now?”) rather than issuing commands (“Stand up now for exercise.”) tends to inspire more trust and willingness to comply. Designers have noted that older adults respond better to robots that act like partners or assistants instead of authoritative figures.

- Consistency and Predictability: Finally, human-robot trust builds with consistent positive interactions over time. Each successful, pleasant interaction – each day the robot correctly reminds and the user successfully follows through – adds a “trust credit.” Consistency in the robot’s personality and routine also matters; dramatic changes or unpredictable behavior can unsettle users. Older adults appreciate a reliable routine (e.g. greetings at known times, consistent tone of communication). Over time, as familiarity grows, older users often become more comfortable and trusting, engaging in more open dialogue and even friendship-like rapport. Longer-term studies indicate that trust can deepen with prolonged use: for example, seniors who interacted with a health-monitoring robot over weeks began to attribute empathic qualities to it and felt more at ease with its presence. However, consistency should be balanced with some adaptability (to not become too monotonous), which we will discuss later.
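
To make the transparency and user-control factors above concrete, here is a minimal Python sketch of a reminder that states its reason, asks rather than commands, and lets the user snooze or decline. It is an illustration under stated assumptions, not a description of any real robot's software: the `Reminder` class, the `phrase` and `handle_reply` functions, the accepted reply words, and the 30-minute snooze default are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reminder:
    """Hypothetical reminder record; field names are illustrative only."""
    task: str                 # e.g. "take your Lisinopril"
    reason: str               # plain-language rationale spoken to the user
    due: datetime
    status: str = "pending"   # pending | done | snoozed | declined


def phrase(reminder: Reminder) -> str:
    """Word the reminder as an invitation and always say why."""
    hour = reminder.due.hour % 12 or 12
    suffix = "AM" if reminder.due.hour < 12 else "PM"
    time_text = f"{hour}:{reminder.due.minute:02d} {suffix}"
    return (
        f"It's {time_text}. Shall we {reminder.task} now? "
        f"{reminder.reason} You can also say 'later' or 'no thank you'."
    )


def handle_reply(reminder: Reminder, reply: str, snooze_minutes: int = 30) -> str:
    """Update the reminder from the user's reply; the user keeps the last word."""
    reply = reply.strip().lower()
    if reply in {"yes", "ok", "done"}:
        reminder.status = "done"
        return "Thank you. I've noted that."
    if reply == "later":
        reminder.due += timedelta(minutes=snooze_minutes)
        reminder.status = "snoozed"
        return f"No problem. I'll ask again in {snooze_minutes} minutes."
    if reply in {"no", "no thank you"}:
        reminder.status = "declined"
        return "Understood. I won't ask again today."
    # Unrecognized input: ask again instead of guessing.
    return "Sorry, I didn't catch that. Would you like to do it now, later, or not today?"
```

In this sketch a declined reminder is recorded rather than repeated, which reflects the idea that the robot is a helper on the user's terms. Whether and how a declined medication reminder should be escalated to a human caregiver is a care-policy question the sketch deliberately leaves open.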

Below is a summary table of key trust dimensions specifically noted by older adults regarding robot caregivers, based on qualitative research findings:

| Trust Factor | Description | Illustrative Example |
|---|---|---|
| Professional Competence | The robot’s ability to perform health tasks accurately and safely (similar to a human professional’s skill). | Robot correctly dispenses the right pills at the right times every day, demonstrating accuracy. |
| Reliability & Consistency | The robot behaves predictably and its systems work without frequent errors or downtime. | Robot follows the same morning routine (greeting, vital check) without fail, and has minimal technical glitches over months of use. |
| Communication Ability | Clarity in conveying information; good listening and appropriate responses to the user’s needs. | Robot speaks in a clear voice, repeats or rephrases if the senior doesn’t understand, and responds relevantly to questions. |
| Empathy & Benevolence | Showing care, respect, and positive intentions toward the older adult (perceived kindness). | Robot uses a gentle tone, offers encouragement (“I’m proud of you for exercising today!”), and remembers to ask about the senior’s well-being. |
| Companionability | The robot’s ability to be a pleasant companion and engage in friendly social interaction. | Robot tells a joke or asks about the user’s favorite music, creating moments of companionship rather than just issuing tasks. |
| Physical Design & Comfort | The robot’s appearance, tactile materials, and movements that affect how safe and comfortable it feels. | Robot has a soft exterior and moves at a slow, non-startling pace when near the user, making it less intimidating to accept in personal spaces. |
| Transparency & Trustworthiness | The degree to which the robot’s actions and decisions are understandable and honest. | Robot explains why a blood pressure check is needed and admits if it doesn’t have an answer, rather than staying silent or bluffing. |
| User Control & Autonomy | Allowing the older adult to influence or override the robot’s operations, preserving the person’s sense of control. | The senior can reschedule a reminder or tell the robot “no thank you” to a suggested activity without conflict, establishing the robot as a helper on their terms. |

Table: Major factors influencing trust in eldercare robots, as identified by older users and researchers. A trustworthy robot must blend professional competence with personal warmth, communicate clearly, and operate transparently while respecting the user’s autonomy.

These factors often interact in complex ways. For instance, a friendly humanoid appearance (anthropomorphism) might raise initial trust, but if not backed by competent behavior, trust can quickly collapse – highlighting the importance of consistency between factors. Moreover, different individuals prioritize different factors: some older adults might care most about a robot’s reliability and be relatively indifferent to its personality, while others might need the robot to show empathy and companionship to truly accept it.

Understanding these elements provides a foundation. Next, we delve into specific strategies by which robots can actively cultivate trust. We group these strategies into two categories: narrative techniques (how the robot communicates and the stories it tells) and behavioral techniques (how the robot acts, including nonverbal cues and interactive behaviors).

Building Trust Through Narrative Communication

“Narrative” in this context refers to the way a robot uses language and storytelling during interactions. How a robot communicates – the content, style, and relatability of its dialogue – can significantly influence an elder’s trust. Humans often connect and build trust through stories; similarly, robots can employ narrative techniques to create rapport, explain their actions, and persuade gently. Here are some narrative-based approaches for trust-building:

- Storytelling to Create Personal Connection: Robots can share short stories or anecdotes as a means to bond with the user. For example, a robot might tell a lighthearted story about “a day in its life” or recall “Yesterday, you told me about your garden, and I was thinking about how lovely that story was.” Such narrative sharing can make the robot seem more personable and relatable, rather than a sterile machine. One study had older adults engage in naming and storytelling exercises with a cute social robot, and found that letting seniors assign a name and imagine a background story for the robot led to deeper emotional connections. Participants wove personal memories and cultural references into the robot’s “story,” effectively humanizing it and expressing hopes for it to be emotionally intelligent. This suggests that a robot encouraging the user to reminisce or listen to stories can strengthen their relationship. By telling stories (even fictional ones), a robot can also project a friendly personality – for example, a companion robot might narrate an uplifting tale or a past success story (“I once helped someone recover their strength after surgery by exercising a little each day…”) to inspire the user. These narratives, if done authentically, can elicit empathy and trust, as the user starts to see the robot as having a narrative presence in their life rather than being a gadget.

- Using Narrative for Gentle Persuasion: Stories are a powerful tool for persuasion without feeling pushy. A robot aiming to encourage healthy behavior might frame advice within a narrative context to make it more digestible. For instance, instead of plainly stating facts (“Walking 30 minutes daily improves health”), the robot could say: “Let me tell you about Jane – she’s 78 and was hesitant to exercise, but she started taking short walks and found she could play with her grandkids longer. This is why even a small walk each day can be wonderful.” This approach wraps the advice in a relatable story that can inspire and build trust in the advice’s relevance. Research in HRI has shown that a storytelling robot can be more persuasive, especially if combined with appropriate social cues. In one experiment, a robot told participants a moral story and those who heard it delivered with engaging narrative and proper eye contact were more influenced by the message. The narrative format likely lowered their guard and increased identification with the content, suggesting that older adults might similarly respond to health advice embedded in a little story or example. The narrative should be kept concise and clearly tied to the user’s situation for maximum effect.

- Relating to the User’s Own Life Story: Older adults have rich life histories, and a robot that acknowledges or incorporates elements of the user’s personal narrative can gain trust. This could involve the robot asking about the senior’s past experiences or values, and then referencing them in future conversations. For example, if a user once worked as a chef, the robot might say during a dietary suggestion, “I know cooking has been a big part of your life. Perhaps we can try a healthy recipe together, drawing on your expertise in the kitchen.” By doing so, the robot validates the person’s identity and shows respect for their story, which can create a sense of partnership. Researchers note that involving older adults’ personal narratives in the design of robot interactions helps ensure the robot’s advice aligns with the person’s values and habits. In other words, the more the robot’s “narrative” fits into the user’s own narrative of their life, the more trust and compliance it can foster. It feels less like an outsider imposing and more like an advisor who understands “where I’m coming from.”

- Maintaining a Consistent Persona and Backstory: Some advanced robots are programmed with a persona or backstory which they can share with the user over time. For example, a robot might have a friendly character profile like “I’m Joy, a home healthcare robot who loves helping people stay active. I was created by a team of doctors and engineers.” By sharing bits of this narrative and keeping it consistent, the robot provides the user with a stable sense of “who” the robot is. Consistency here is important: if one day the robot says it’s shy and the next day it behaves brashly with a conflicting story, trust can erode. But a coherent narrative persona can help some users treat the robot more like a trustworthy assistant with an identity, rather than a faceless device. This technique must be used carefully to avoid deception – the narrative should be light and clearly fictional if it goes beyond factual information (users generally understand that the robot did not literally have a childhood). The goal is not to trick the senior, but to give a conversational continuity that makes interactions more engaging. Emotional storytelling can also be part of this – for instance, a robot might “share” how it feels about certain tasks (“It makes me happy when I see you feeling better after our exercise sessions.”) to build an empathic bond. Indeed, experiments where a robot disclosed a personal “sad story” found that users responded with empathy and some even consoled the robot, indicating that a two-way emotional connection had formed. This kind of mutual empathy narrative can strengthen the human-robot relationship, though designers should remain ethical about the extent of quasi-personhood given to the robot.

- Explanatory Narratives & Transparency: One of the most practical narrative strategies is using clear explanations or micro-narratives to accompany the robot’s actions. When the robot provides health advice or requests the user to do something, framing it as part of a story or reason can enhance understanding and trust. For example: “It’s time to take your blood pressure. Last week, it was a bit high on a few evenings, so I’d like to check it now to ensure it’s in a good range. That way, we can make sure your new medication is working properly.” In this explanation, the robot references a timeline narrative (last week to now) and a rationale (ensure medication works), which helps the user follow the logic. Such narrative context can reduce the feeling of arbitrary commands, making the user more comfortable complying. Another scenario: if the robot cannot fulfill a request, a narrative explanation like “I’m sorry I can’t find that information right now. My internet connection is down, but I’ll try again in a few minutes” is far better for trust than a silent failure or a generic error. It tells a short story of what went wrong and what will happen next, keeping the user informed. Transparency through narrative has been emphasized as vital for trust in AI-driven systems, so that users aren’t left guessing the robot’s motives.

- Cultural and Ethical Framing: Narratives can also help navigate sensitive cultural or ethical issues. For instance, if an older adult is from a culture that values deference to elders, a robot might build narrative respect by occasionally asking the user to share wisdom or stories from their life (“You have so much experience; I’d love to hear your advice on…”). This reverses roles momentarily and shows the robot “respects” the elder’s narrative, which can increase trust, as the elder doesn’t feel the robot is always in the telling position. Ethically, when persuading for health, using positive narratives (highlighting success stories, reinforcing the person’s own goals) is preferable to fear-based narratives. A robot should avoid overly negative storytelling (e.g. scaring the user with a story of someone who suffered by not taking medication) because while it might spur compliance short-term, it can hurt trust and cause distress. Instead, uplifting or empowering stories align better with a caring trust relationship.
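
The explanatory micro-narrative described above (say what went wrong, then say what happens next) can be sketched as a small message template. This is only an illustrative Python sketch: the failure codes, the `EXPLANATIONS` table, and the `explain_failure` function are hypothetical, and the wording is adapted from the examples quoted in this article.

```python
# Hypothetical failure codes mapped to (what went wrong, what happens next).
EXPLANATIONS = {
    "offline": ("My internet connection is down", "I'll try again in a few minutes"),
    "not_a_doctor": ("I'm just a robot and not a doctor", "we can contact your physician"),
}

# Used when the cause is unknown, so the robot still says something honest.
FALLBACK = ("Something went wrong on my side", "I'll try again shortly")


def explain_failure(request: str, code: str) -> str:
    """Turn a failed request into a short, honest explanation instead of silence."""
    what_went_wrong, what_next = EXPLANATIONS.get(code, FALLBACK)
    return f"I'm sorry, I can't {request} right now. {what_went_wrong}, but {what_next}."


print(explain_failure("find that information", "offline"))
```

Keeping the cause and the next step as separate fields is a design choice that makes it hard to emit one without the other, which is the point of the narrative framing: the user hears both why the request failed and what to expect.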

In sum, narrative communication humanizes the interaction. It turns one-directional instructions into a conversation that has context and empathy. By carefully incorporating storytelling, personal context, and clear explanations, robotic advisors can move from being seen as mere tools to being perceived as understanding partners. This narrative approach, combined with solid performance, makes the user more likely to trust the robot and heed its counsel. Of course, narratives alone are not enough – they must be backed by the robot’s observable behavior and abilities, which we discuss next.

Building Trust Through Consistent and Empathetic Behavior

While words and stories matter, actions often speak louder. Behavioral trust-building focuses on what the robot does – how it physically interacts, responds nonverbally, and demonstrates its reliability and care through actions. For older adults, the robot’s behavior can either reassure them of its trustworthiness or raise red flags. Key behavioral strategies include:

- Socially Expressive Cues (Eye Contact, Gestures, Body Language): Humans intuitively gauge trust through body language and eye gaze, and similar principles apply to robots. A robot that can make appropriate eye contact (or the equivalent with sensors/cameras) when “listening” to a person can make the person feel acknowledged and respected. Experiments have shown that a robot maintaining eye gaze with a listener while speaking can increase the listener’s engagement and trust in what’s being said. Similarly, gestures that align with speech – such as nodding to signal understanding, or open-arm gestures to appear welcoming – contribute to a robot’s warmth and credibility. For example, if an older adult shares a concern (“I feel a bit dizzy today”), a robot that turns towards them, perhaps tilts its head sympathetically and says “I’m sorry to hear that” while nodding slightly, communicates attentiveness and empathy nonverbally. In contrast, a static robot with no movements or one that swivels randomly can seem aloof or unresponsive. Research on socially assistive robots for seniors found that participants commented most on the robot’s nonverbal behaviors like eye contact and body orientation as critical for making the robot persuasive and likable. The presence of these cues triggers a more human-like social response, making it easier for the older person to trust the robot’s intentions. Notably, combining multiple cues is important: one study noted that a robot’s gestures only improved persuasion when accompanied by proper gaze; disjointed gestures without eye contact actually confused participants. This underscores that behaviors must feel natural and well-coordinated.

- Emotional Expression and Empathic Feedback: Beyond neutral social cues, a robot’s ability to display or respond to emotions appropriately is crucial in eldercare. An older adult is more likely to trust a robot that seems to understand and care about their emotional state. Behavioral examples include the robot modulating its tone of voice to convey concern when the user is upset, “smiling” (if it has a face or LED expressions) when the user is happy or when giving praise, and perhaps mirroring the user’s mood to some extent (within believable robotic limits). Even simple actions like a gentle pat on the shoulder or a light touch on the hand (if the robot is equipped for safe physical contact) can have a strong positive effect – physical therapists note that appropriate touch can build trust by conveying comfort. In a study involving a small humanoid caregiver robot, older participants who experienced the robot touching their arm for a health check (such as simulating taking a pulse) found it reassuring and showed higher trust, as the touch was perceived as a caring gesture. That said, touch is very personal; the robot must have user consent and cultural awareness (some elders may prefer no touch at all). Empathic behavior also means responding to the content of what the senior says. If a user complains “I’m really tired today,” a trust-building robot might physically slow its movements and say softly, “I understand, let’s take it easy.” Recognizing and validating feelings helps the older adult feel seen by the robot, enhancing trust that “this robot understands me.” Field trials with socially assistive robots (like the furry seal PARO and conversational agents like ElliQ) have reported that when robots appeared sensitive to users’ moods – for instance, playing calming music when the user was anxious or saying encouraging words when the user was down – the seniors developed greater trust and affection for the robot. Conversely, if a robot plows ahead with its agenda while ignoring the user’s emotional cues, it can come across as uncaring and diminish trust.

- Dependability and Consistency in Actions: Reliable behavior over time, as touched on earlier, is demonstrated through consistent routines and dependable performance. This includes the robot being punctual with reminders, accurate in its sensing (e.g., not raising frequent false fall alarms or missing real falls), and stable in operation (not crashing or needing constant reboots). Each day of smooth operation strengthens trust: the older adult learns they can count on the robot at certain times for certain tasks. One key behavioral aspect is error handling. A completely error-free robot is unrealistic, but a robot that handles its mistakes well can actually build trust. For example, if the robot fails to understand a request, a good behavior is to apologize and try again or offer a workaround (“Sorry, I didn’t catch that. Could you repeat a bit slower?”). If the robot gives an incorrect piece of information and later corrects it (perhaps after a cloud update), it should acknowledge the error: “I need to correct myself: Yesterday I said your appointment was at 3 PM, but it’s actually at 2 PM. I apologize for the confusion.” Such honesty in behavior can preserve and even repair trust because it shows the robot is aware of its actions and accountable, rather than pretending nothing happened. Users tend to forgive occasional errors if the system is transparent and works to fix them, a process known as trust repair in human-robot interaction. On the other hand, if the robot’s behavior is erratic – say it sometimes fails to give a reminder with no explanation – the inconsistency will plant seeds of doubt (“Can I really rely on it?”), reducing both trust and compliance.

- Adapting to the User’s Pace and Preferences: A trustworthy robot pays attention to the user’s behavior and adapts accordingly. Older adults vary widely in their hearing, vision, cognitive speed, and comfort with technology. Robot behavior should be adaptive – for instance, speaking slower and louder if a user frequently asks “What?” or moving out of the way if the user is unsteady on their feet and approaching. Adaptivity also means personalizing routines: if the robot notices the user consistently ignores a reminder at 7 AM but responds at 8 AM, it might adjust its schedule. By adapting, the robot demonstrates attentiveness, a quality highly valued in caregiving. It shows the robot “learns” from the senior, which can impress and reassure them that the robot is truly there to help in a personalized way. However, adaptivity should be communicated (tying back to narrative transparency) – for example, “I’ve noticed you prefer walking in the afternoon, shall I remind you later instead of this morning?”. This invites the user’s input and signals that the robot is not rigid. Studies in assistive robotics suggest that responsiveness to user input – whether verbal or via sensors – is a cornerstone of building rapport and trust, especially in long-term interactions. In fact, ongoing research is exploring cognitive models and AI (like reinforcement learning or user modeling) for robots to tailor their behavior over weeks and months for each individual senior, precisely to maintain engagement and trust as the novelty wears off.

- Physical Approachability and Comforting Presence: The way a robot positions itself and moves in the elder’s environment directly affects trust. Approachability involves respecting personal space – not startling the user by coming too close or moving too fast. Many eldercare robots have been programmed to maintain a modest distance unless invited (e.g., not rolling right up behind someone silently). When moving, a gentle whir with some audio cue is better than completely silent gliding, which can spook people. If the robot has a face display, keeping it oriented toward the person when conversing (but not staring constantly) shows attentiveness. A simple behavior like turning toward the person when they speak to the robot is essential – if the robot faces away or continues doing something else, the person will feel ignored and trust declines. Additionally, physical assistance behaviors need to inspire confidence: if a robot is helping someone stand up or fetch an item, its motions should be steady, slow, and well-coordinated. Any shaky or abrupt motion in such scenarios can be very scary for an older adult (imagine a robot jittering while lifting a senior’s arm – trust would vanish). Engineers test these assistive behaviors extensively for smoothness and fail-safes to ensure the user feels safe. When older adults see that, for example, a robotic arm can reliably lift a cup to their lips without spilling tea on them day after day, they develop trust in the mechanical reliability and start to relax their guard during those interactions. In contrast, if the first experience is a spill or a minor collision with furniture, they may remain anxious thereafter whenever the robot moves near them.

- Positive Reinforcement and Encouragement: The robot’s behavioral response to the user’s compliance can also reinforce trust and future compliance. If an older adult follows the robot’s suggestion (like completing a rehabilitation exercise), the robot offering praise or positive feedback is a beneficial behavior. A simple clap animation or a verbal “Well done! I can tell that was not easy, but you did great,” can give the user a sense of accomplishment and a feeling that the robot genuinely cares about their progress. This not only rewards the compliance but also builds an emotional bond (the user might delight in pleasing the robot, a phenomenon observed in some elder-robot studies where seniors did tasks partly “for the robot’s sake” because they felt it kindly expected this of them). Praise has been identified as an effective strategy in persuasive robotics for health, but it must be sincere and proportionate to avoid feeling patronizing. Along with praise, showing patience is key: if a user is slow to comply or is having difficulty, the robot should not display impatience (never sighing or saying “you should do better”). Instead, encouraging behavior like offering help (“Would you like me to guide your hand?”) or breaking the task into smaller steps can maintain trust even when the user struggles. The user then knows the robot won’t “give up” on them or get annoyed – a foundation for a trusting partnership.

- Discreet and Privacy-Respecting Behaviors: In eldercare, many activities can be intimate or sensitive (hygiene, health monitoring). A robot should behave in ways that respect privacy and personal boundaries to retain trust. Behaviorally, this could mean averting its gaze or camera when the user is in a state of undress (if the robot helps with dressing or bathing, perhaps it only actively scans the immediate task and doesn’t “stare” elsewhere). It might also mean not announcing sensitive data in front of others – e.g., before reading weight or vitals aloud, checking that no one else is around if the user might be embarrassed by the numbers. If the robot is about to do something that could be sensitive, it should ask permission or at least inform the user: “I need to lift your pant leg a bit to check the swelling on your ankle, is that okay?” This echoes the behavior of a thoughtful nurse and can greatly increase an elder’s comfort level. When users sense that the robot “knows its place” and won’t violate their dignity or confidentiality, they are more likely to trust it with personal matters. Conversely, any behavior that seems intrusive (roaming into the bedroom uninvited, accessing personal drawers) can seriously breach trust. Thus, careful programming of spatial and social boundaries – essentially etiquette – is an important part of robot behavior design.

In summary, a robot earns trust not just by what it communicates, but how it behaves. By mimicking many of the positive nonverbal attributes of a good human caregiver (attentive eye contact, gentle tone, steady assistance, respect for boundaries) and by demonstrating technical reliability and adaptivity, a robotic advisor can prove itself to an older user over time. The ultimate goal is for the user to feel safe, understood, and supported in the robot’s presence – which will naturally lead to them following the robot’s guidance as they would a trusted nurse or family member’s advice.
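The adaptive scheduling described above (a user who ignores a 7 AM reminder but responds at 8 AM) can be illustrated with a short Python sketch. It is only an illustration, not the software of any real robot: the function names, the log format, and the two thresholds are assumptions chosen for the example. The function only proposes a new hour; the robot still asks the user before changing anything, in line with the point that adaptivity should be communicated.

```python
from collections import defaultdict


def suggest_reminder_hour(log, current_hour, min_samples=5, min_gain=0.3):
    """Propose a better reminder hour, or return None to keep the schedule.

    log: list of (hour, responded) pairs, e.g. (7, False) or (8, True).
    A new hour is proposed only if it has at least `min_samples` observations
    and its response rate beats the current hour's by at least `min_gain`.
    Both thresholds are illustrative assumptions, not validated values.
    """
    counts = defaultdict(lambda: [0, 0])  # hour -> [responses, attempts]
    for hour, responded in log:
        counts[hour][1] += 1
        if responded:
            counts[hour][0] += 1

    def rate(hour):
        responses, attempts = counts[hour]
        return responses / attempts if attempts else 0.0

    candidates = [
        h for h in counts
        if h != current_hour and counts[h][1] >= min_samples
    ]
    if not candidates:
        return None
    best = max(candidates, key=rate)
    if rate(best) - rate(current_hour) >= min_gain:
        return best
    return None


def confirmation_prompt(new_hour):
    """Phrase the proposed change as a question so the user stays in control."""
    return (
        f"I've noticed you usually respond around {new_hour}:00. "
        "Shall I remind you then instead?"
    )
```

With six ignored reminders at 7 AM and five of six answered at 8 AM, `suggest_reminder_hour(log, 7)` proposes 8, and `confirmation_prompt(8)` turns that proposal into a question rather than a silent change.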

Trust in Sensitive Eldercare Contexts

Eldercare often involves deeply sensitive situations – both physically and emotionally. It’s important to address how trust is built or challenged in these contexts, as they require special care:

- Intimate Physical Care (e.g. Bathing, Dressing): These are tasks that can make anyone feel vulnerable, and perhaps even more so for older adults who may be sensitive about needing help. If a robot is to assist with a personal task like bathing, trust needs to be extremely high. Many seniors initially express discomfort at the idea of a machine handling such private care. To build trust here, the robot must prove its competence and gentleness gradually. One strategy is starting with less intimate tasks – for example, a robot might first help by fetching towels or monitoring water temperature (building trust in its helpfulness and safety), then later progress to more direct assistance like gently washing an arm, once the user is more comfortable. Throughout, the robot’s behavior and narrative should emphasize respect and privacy: it could play soft music or give the user verbal privacy (“I’ll give you a moment, just call me if you need help washing your back”). The robot should also be very clearly non-judgmental – older adults often fear being judged for their frailty or body by human caregivers; a robot that simply and efficiently helps, perhaps even complimenting the user (“You’re doing great.”), can create a safe space. A qualitative study found that older persons would consider trusting a robot in bathing if it were highly reliable and made them feel secure, but they still valued traits like respectfulness and preserving dignity as much as technical skill. Therefore, combining skilled performance with a tactful, patient approach builds trust in these intimate tasks.

- Cognitive and Memory Support: For seniors with mild cognitive impairment or early dementia, trust takes on a different nuance. They may not fully understand what the robot is, or they might forget instructions easily. Here the robot must be exceedingly patient and repetitive without frustration. Consistency is comforting for those with memory issues – the robot might re-introduce itself regularly (“Hi, it’s me, your helper robot. I’m here to remind you about your medications.”) in case the person forgets. Using a familiar narrative every day (like a short greeting ritual or a tune) can create a sense of stability. These users might also be more prone to overtrust or anthropomorphize – e.g., believing the robot is alive or a friend. That can help with compliance (they might obey a “friendly robot” where they wouldn’t take a generic pill reminder seriously), but it raises ethical questions about deception. Ideally, the robot should not explicitly reinforce false beliefs; rather it should focus on comforting and guiding behavior. Paro the robotic seal, for example, is used in dementia care to calm patients through pet-like behavior, and patients often trust and respond to Paro as if it were a living pet, which improves their mood and cooperation in care. This is a form of trust built on emotional comfort. The takeaway is that for cognitively impaired elders, trust-building focuses on repeated positive interactions, simple language, and gentle demeanor. Importantly, for compliance, the robot’s reminders may need to be paired with visual or auditory cues (like flashing lights, or a specific chime) that become associated with tasks in the person’s mind since memory is at play.

- Medical Decision-Making Advice: If a robot provides advice on serious medical decisions (e.g., whether to take a certain treatment or to follow a certain diet for a condition), trust is critical, yet seniors may be inherently skeptical or want human confirmation. In such scenarios, robots should augment, not replace, clinician advice. The trust in the robot might come from it being seen as an extension of the medical team. For example, a robot might say, “Based on Dr. Smith’s instructions, I advise you to measure your blood sugar now.” Mentioning the doctor creates a narrative link to a trusted human authority, bolstering credibility. Without that, an older adult might rightly think, “Why should I trust you over my doctor or my own intuition?” For compliance in these high-stakes decisions, the robot could present itself as a knowledgeable assistant: citing sources or protocols (“The home care guideline recommends you exercise to improve circulation, so let’s try that.”). Still, many older adults will want a doctor or nurse’s validation for major changes; a good robot should encourage consultation and not appear offended if the user cross-checks (“Yes, please discuss this with your doctor. I can help summarize your data for them.”). By facilitating involvement of healthcare professionals, the robot can actually increase the user’s trust that it’s not a rogue AI but part of a care team. Additionally, ethical behavior like never giving advice outside its scope (e.g., not diagnosing new symptoms, but instead saying “I’m not qualified to diagnose that; we should contact a doctor”) is paramount. This humility in behavior assures the user that the robot is conscious of its limits, which reinforces trust that the advice it does give is within safe bounds.

- Emergency and Risk Scenarios: Trust is truly tested during emergencies – say a fall, a sudden illness, or a dangerous situation like leaving the stove on. If a robot is present, the older adult needs to trust it to respond appropriately (call for help, provide guidance). Behaviorally, a robot that has proven itself in small ways may earn the opportunity to be trusted in a crisis. For example, if the robot calmly helps the user when they stumble slightly (perhaps rolling over and bracing them or promptly alerting a caregiver), the user will remember that in a bigger emergency. A trustworthy emergency response involves the robot acting swiftly but also keeping the user informed: “I detected a fall. I’ve called your daughter and an ambulance is on the way. Please remain still, and I will stay with you.” This combines practical action with a reassuring narrative. Knowing the robot will not “panic” and will do the right thing is a huge factor in overall trust – it can be literally life-saving compliance (like staying calm and listening to the robot’s instructions after a fall, rather than trying to get up alone). However, false alarms or overreactions can undermine trust (the boy-who-cried-wolf effect). If a robot calls an ambulance when none was needed, the older adult might be upset and less likely to heed real alarms. Thus, fine-tuning the sensitivity of such systems and possibly involving the user in confirmation (“It seems you fell. Should I call for help?” if the person is conscious and able) can preserve trust.

- Emotional Support and Loneliness: Many older adults face loneliness, and robots are being introduced as companions to chat, play games, or connect them with others. Trust in this realm means the person feels emotionally safe with the robot – they can share feelings or spend time without fear of ridicule or harm. The robot’s behavior as an emotional supporter should involve active listening (like repeating or paraphrasing what the user says to show it’s listening), offering consolation or positivity, and importantly, keeping confidences. If a senior tells the robot something personal (e.g., “I miss my late husband terribly today”), the robot should respond supportively and not broadcast that to others without permission. The user needs to trust that their emotional openness with the robot remains in that space, similar to how one trusts a friend or therapist. This raises data privacy issues: any recorded conversations must be secure. Narratively, the robot can remind the user that it keeps their stories private (“I’m here to listen. Anything you share with me stays between us unless you want me to tell someone to get you help.”). When robots successfully provide comfort, users often anthropomorphize them as friends. This trust can greatly improve quality of life – in New York, a program giving socially assistive tabletop robots to hundreds of seniors found reduced loneliness and high engagement, with many users developing a daily routine of chatting with their robot about news or memories. One user in that program said having the robot “was like having a granddaughter visit daily,” illustrating the level of trust and companionship that can develop. Designers must cherish that trust and ensure the robot doesn’t inadvertently violate it (for example, by having a bug that shares personal info in front of family, which could embarrass the user).

- Cultural Sensitivity: Trust can also be influenced by cultural norms. In some cultures, elders might be predisposed to trust authoritative figures, which could extend to a very formal, professionally speaking robot. In others, a more humble, deferential robot persona might be trusted more. The narrative and behavior should ideally be adjustable to the user’s cultural expectations. For instance, forms of address (using last name vs. first name, honorifics like “Sir/Madam” vs. a casual tone) can matter in showing respect. A culturally inappropriate behavior or joke could quickly break trust. Thus, understanding the elder’s background (maybe asking about their preferences explicitly) and customizing the interaction style is wise. Some advanced robots allow caregivers to set certain cultural parameters or the robot can learn by trial and error what the person responds well to. In any case, one-size-fits-all could lead to missteps in sensitive scenarios.

Ultimately, in sensitive contexts, earning trust is a gradual process. Many experts suggest introducing robotics into eldercare in stages – first building familiarity (low-stakes interactions) and then increasing responsibilities as trust grows. During this, feedback from the older adult is critical: they should feel empowered to say what makes them uncomfortable or what they need, and the robot or the care team should adjust accordingly. Trust is highly individual, especially in personal care; listening to each elder’s concerns and boundaries will guide the robot’s narrative and behavior adjustments.
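The emergency bullet above suggests tuning detector sensitivity and asking a conscious user for confirmation before calling for help. A minimal Python sketch of that decision logic follows. Everything in it is hypothetical: the `Action` names, the `fall_response` function, the detector confidence score, and the 0.3 cutoff are assumptions for illustration, and any real threshold would need safety and clinical validation.

```python
from enum import Enum


class Action(Enum):
    NO_ACTION = "no_action"
    ASK_USER = "ask_user"    # "It seems you fell. Should I call for help?"
    CALL_HELP = "call_help"


def fall_response(confidence, user_responsive, user_reply=None, ignore_below=0.3):
    """Decide what to do after a possible fall.

    confidence: detector score in [0, 1] (hypothetical sensor output).
    user_responsive: whether the user answered the robot's check-in.
    user_reply: "yes" or "no" to the confirmation question, or None if the
        question has not been asked yet.
    """
    if confidence < ignore_below:
        return Action.NO_ACTION      # too weak a signal; avoid a false alarm
    if not user_responsive:
        return Action.CALL_HELP      # likely fall and no answer: escalate
    if user_reply is None:
        return Action.ASK_USER       # conscious user: confirm before calling
    return Action.CALL_HELP if user_reply == "yes" else Action.NO_ACTION
```

The design choice is that silence after a likely fall escalates immediately, while a responsive user keeps the final say, which is one way to limit the boy-who-cried-wolf effect without delaying help when it is needed.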

Ethical and Practical Challenges in Fostering Trust

Designing robotic advisors that older adults trust and willingly follow is not without challenges. Some major ethical and practical considerations include:

- Avoiding Over-Persuasion and Manipulation: While we want seniors to comply with healthy behaviors, it’s important that trust-building does not cross into unwelcome manipulation. Techniques like storytelling and praise should be genuine and not coercive. There’s a fine line between nudging someone for their own benefit and unduly influencing a vulnerable person’s decisions. Ethicists argue that robots must respect the autonomy of older adults, obtaining consent for major interventions and not tricking them with false promises. For instance, using a fictional narrative to persuade (like a made-up success story) could be considered manipulative if the user believes it to be factual. Robots should strive for ethical persuasion, meaning they encourage positive action through honest means (empathic reasoning, factual stories, user’s own values) rather than fear, guilt, or deception. Transparency is key: if the robot has a goal (like increasing exercise), it can be open about it – “I suggest these walks because I want to help you stay strong” – rather than hiding its intent. This respects the user’s right to know and fosters trust in the robot’s integrity.

- Trust Calibration: As noted, both too much trust (over-trust) and too little trust (under-trust) are problematic. One challenge is ensuring the user has a correct mental model of the robot’s abilities. Older adults who are not technologically savvy might either underestimate the robot (“It probably can’t do much”) or overestimate it (“It’s a smart machine, it must know everything”). Developers need to calibrate this through education and through the robot’s own explanations. For example, the robot might explicitly say during setup, “I can remind you of medications and answer some common questions, but I don’t have medical training like a doctor.” Clear cues when the robot is unsure or when it is switching modes also help: if it connects to an online source for information, it can say so. Proper calibration leads to appropriate compliance: the user will follow the robot’s advice when it makes sense and seek human help when the robot signals to do so. Over-trust could lead to dangerous compliance, like taking a medication dosage from the robot that was meant to be adjusted by a doctor. Under-trust could mean ignoring even correct reminders. Continuous user education and periodic trust check-ins (perhaps asking the user if they are comfortable with what the robot is doing, or encouraging them to ask “why” if they are unsure) can mitigate miscalibration.

- Privacy Concerns: Trust can be easily broken if a robot is perceived to be violating privacy. Many eldercare robots have cameras and microphones and collect health data. It’s essential to have robust data protection and to communicate to users (and their families) what data is collected, where it goes, and who can access it. Older adults might worry, “Is it recording me all the time? Who can hear our conversations?” If those worries linger, they may not fully engage (e.g., they might avoid talking to the robot about personal issues). Clear privacy policies and on-device processing for sensitive data can help. Some systems have a physical privacy mode (like the robot’s camera eyes literally closing or turning away when asked). Building trust here means showing the user that their information is safe – be it health metrics or personal stories. Given the increasing use of cloud-connected AI (like language models) in robots, encryption and strict access control are mandatory to prevent data leaks. Families and care providers must earn the senior’s trust too: if the robot reports data to a caregiver app, the senior should know and consent. Ethical frameworks for social robots call for treating the older person’s data with the same confidentiality as medical records.

- Transparency vs. Friendliness: There is sometimes tension between being transparent (e.g., admitting limitations) and maintaining a friendly persona (which might tempt designers to gloss over limitations). For trust, transparency should win out. If the robot doesn’t understand something, it should not just give a random response to appear competent – that could mislead the user. Large Language Model (LLM)-driven robots, for instance, can be very conversational and friendly but may sometimes hallucinate incorrect information. This is dangerous in healthcare advice. Developers are working on grounding LLM responses in verified health databases to prevent this. Until such grounding is reliable, one approach is for the robot to provide confidence indicators: e.g., “I think your question might be about nutrition, but I’m not entirely sure. Let me clarify or find out.” This level of candor actually boosts trust because the user learns that when the robot does give a clear answer, it is more likely to be reliable. It’s better for a robot to say “I don’t know” than to say something wrong confidently. This honesty is especially important for eldercare, where misinformation can cause real harm.

- Emotional Attachment and Dependency: An ethical concern is that if a robot does its job very well, an older person might become too attached or dependent on it for emotional needs. While reduced loneliness is a positive outcome, there have been cases where seniors, for example, refuse to be separated from a companion robot or treat it as their primary confidant. Problems arise if the robot is taken away (e.g., it breaks or the program ends) – the person might experience grief or isolation again. Also, an unscrupulous design might exploit attachment (like getting the person to do things by saying “Do it for me, or I’ll be sad”). Ensuring a healthy relationship dynamic is important: the robot should ideally encourage human-human interactions too (like reminding the user to call family, or happily stepping aside when family visits). The goal of the robot is to supplement, not completely replace, human contact in eldercare. Striking that balance – providing substantial companionship without displacing human bonds – is tricky. Careful scenario planning and perhaps limiting some social capabilities (so the user doesn’t, say, decide to will their estate to the robot as has been jokingly feared) are part of ethical design.

- Bias and Personalization Limits: Robots might inadvertently carry biases in their programming or learned behavior. For example, a health advisor robot might have been trained on data that doesn’t account for certain age-related issues or cultural practices, leading to biased advice. If an older adult senses that the robot “doesn’t get their reality” (like it keeps recommending jogging to a 90-year-old with arthritis because that was in a generic fitness database), trust erodes. Continuous evaluation with diverse user groups is necessary to weed out such biases. Also, while personalization is good, there is a limit to how finely a commercial robot can tailor to each individual. Not every senior finds the same jokes funny or responds to the same motivational approach. If a robot tries a one-size-fits-all style, it will click with some and not with others. So practically, either these systems need robust customization (perhaps a setup questionnaire or learning phase) or we need to accept partial adoption (some elders will love it, some won’t). Managing those expectations is important for care providers deploying robots: not everyone will equally trust or bond with the robot, and alternative solutions should remain available for those who don’t.

- Interdisciplinary Collaboration: Building trust is not just a technical endeavor; it requires input from psychologists, gerontologists, ethicists, and the older adults themselves. One challenge is ensuring that the voices of seniors are included in the design and testing of these robots. Participatory design methods (direct feedback, co-design sessions) have proven valuable. In one case, older adults contributed their personal stories and preferences during the robot’s development, leading to features that directly reflected their trust concerns. This kind of collaboration ensures the end product respects and resonates with actual user values, not just engineers’ assumptions.

- Regulatory and Safety Compliance: On a practical note, healthcare robots must comply with safety regulations and sometimes medical device standards. This involves rigorous testing and certification. A robot might need approval for contact with skin, mobility in homes, etc. Ensuring fail-safe behaviors (like shutting down safely if something goes wrong) is part of this. From the user’s perspective, knowing that a robot is approved by relevant authorities (maybe the robot can even communicate that it’s certified for home use) can increase trust. Compliance with standards is also tied to liability: if a robot gives health advice, who is responsible if something goes wrong? Ethical deployment means having clear guidelines so the trust placed in the robot is backed by accountability from manufacturers or care organizations.

Addressing these challenges is key to sustaining trust in the long run. It’s not enough to get an older adult to trust a robot on day one; the trust must endure over months and years of use and through various life events. Each ethical misstep or broken promise can undo progress. Therefore, those creating and deploying robotic advisors are urged to prioritize trustworthiness at every level – from hardware safety to software honesty to user education.
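The confidence-indicator idea from the transparency discussion above can be made concrete with a small Python sketch. It assumes, hypothetically, that the robot's language system exposes a confidence score between 0 and 1 and a flag saying whether a question is within the robot's scope; the function name `phrase_answer` and both thresholds are illustrative assumptions, not features of any particular product.

```python
def phrase_answer(answer, confidence, in_scope, high=0.8, low=0.5):
    """Wrap a draft answer in wording that matches how sure the robot is.

    answer: the draft reply text.
    confidence: hypothetical score in [0, 1] from the underlying model.
    in_scope: False for requests the robot must not handle (e.g., diagnosis).
    """
    if not in_scope:
        # Out-of-scope requests are deferred regardless of confidence.
        return "I'm not qualified to answer that; we should contact a doctor."
    if confidence >= high:
        return answer
    if confidence >= low:
        return f"I think so, but I'm not entirely sure: {answer} Please double-check."
    # Below the lower threshold, admitting ignorance beats guessing.
    return "I don't know. Let me find out or ask someone who does."
```

For example, `phrase_answer("Your appointment is at 2 PM.", 0.9, True)` returns the answer unchanged, while the same answer at confidence 0.2 is replaced by an honest "I don't know." The scope check comes first so that even a highly confident out-of-scope answer is never delivered.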

Conclusion

Trust and compliance are the twin pillars upon which the success of robotic advisors in eldercare rests. As we have explored, building trust with older adults is a holistic process. It involves thoughtful communication – where narrative techniques like storytelling, explanation, and personalization make interactions meaningful – and it involves observable behavior – where consistency, empathy, and reliability in actions prove the robot’s dependability. When a robot achieves this trust, older adults are more likely to welcome it into their lives and follow its health guidance, leading to better compliance with medications, exercises, and other beneficial routines. In turn, this can contribute to tangible improvements in health outcomes, safety, and emotional well-being for seniors.

Robotic advisors capable of engaging narratives and compassionate behaviors can transform the caregiving landscape. Imagine a future where an older person living alone considers their robot not just as a device, but as a trusted companion – greeting them each morning with a friendly story or joke, guiding them gently through rehab exercises with encouragement, keeping track of their health needs, and standing by them during distress with calm reassurance. In such a scenario, technology and empathy merge to provide care that is scalable (addressing caregiver shortages) yet deeply personal. We are already seeing the beginnings of this: from social robots that reduce loneliness in care homes, to health advisor robots that older adults heed much like they would a human dietitian or coach.

However, this promising future comes with the responsibility to uphold ethical standards. Trust, once earned, should not be betrayed. Developers and caregivers must ensure these robotic systems are transparent, respect autonomy, and protect user dignity and privacy at all times. Regular updates, user training, and oversight can help maintain the delicate balance where the older adult feels in control of their relationship with the robot. It’s also crucial to continuously gather feedback from seniors: trust dynamics may change as users age further or as their health status evolves, and robots will need to adapt accordingly.

In the coming years, advancements in AI – particularly large language models and emotionally intelligent systems – are expected to give robots even more human-like conversational abilities and adaptability. This could supercharge their capacity to build rapport, as robots will converse more naturally and respond to subtle cues. We might see robots that can detect an elder’s mood from facial expressions or tone of voice and automatically switch their interaction style (offering a comforting narrative on a sad day, or a fun engaging activity on a bored day). With such power, ensuring trustworthiness becomes both easier (because interactions feel natural) and harder (because over-trust and anthropomorphism risks increase). Thus, guiding principles and perhaps even regulations will be needed to keep developments aligned with older adults’ best interests.

In conclusion, establishing trust and fostering compliance in robotic advisors for healthcare is not just about making robots more advanced – it’s about making them truly user-centered and compassionate. By weaving together narrative and behavior in a manner that resonates with the human heart, engineers and caregivers can help older adults view robots not as strange gadgets, but as helpful partners. When an elderly person can say, “My robot understands me and I trust it to help me stay healthy,” we will have unlocked a powerful ally for aging with dignity and support. The journey to that point is well underway, and with careful attention to trust and ethics, robotic caregivers can indeed become a trusted fixture in elder healthcare, improving the quality of life for our seniors while respecting their humanity.
